Feeling Deja Vu?

If the current state of artificial intelligence gives you a strange sense of familiarity, you’re not alone. The non-stop hype, the firehose of venture capital, and the frantic scramble to build the “next big thing” feel less like a new revolution and more like a rerun of the late 1990s. While the comparison isn’t perfect, the patterns of behavior are undeniable. Today’s AI landscape shows strong echoes of the internet circa 1996: the basic technology is becoming reliable, the advanced applications are a chaotic mess, and a staggering amount of money is being spent on visions the technology can’t yet support.

HTML and Core AI

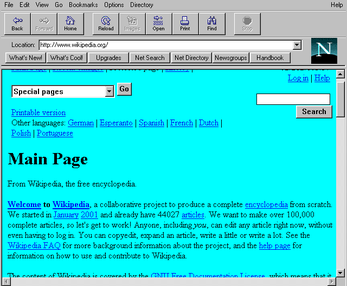

Back in the mid-90s, the web had a solid, dependable foundation: HTML. It was simple, it was for sharing information, and it just worked. You could build a website that would (mostly) load reliably on Netscape Navigator or Internet Explorer, and it would solve a clear problem. It was a well-understood technology that delivered tangible value.

This is where we are with many core AI use cases today. Generative AI for tasks like chat, language translation, and summarization is largely a solved problem. Like HTML, these tools are becoming commodities we take for granted. They provide real value, are relatively easy to implement, and address well-defined needs. They are the solid ground upon which everything else is being built.

The Agentic Gamble

But then there was the “advanced” stuff. In the 90s, the dream of a dynamic, interactive web led to a chaotic explosion of competing technologies. Microsoft’s JScript was locked in a battle with Netscape’s JavaScript. Sun Microsystems pushed Java Applets, which promised rich applications inside the browser but delivered slow, insecure experiences over 28.8k modems. Others tried to build entire application interfaces with browser-specific languages like Mozilla’s XUL (I should know; I built a POS system with XUL). It was a frenzy of brilliant ideas, all pulling in different directions.

This is precisely the situation with AI agents today. To make models do more than just talk, we need them to act. This has led to a Cambrian explosion of agentic frameworks with no clear winner. Anthropic argues for multi-agent systems where teams of specialized AIs collaborate on complex tasks. Cognition Labs argues for single-agent systems designed for long-term, continuous context. We have hybrid models, new prompting techniques, and entirely different architectures all vying for dominance. Just like in 1996, it’s a confusing but exciting landscape of competing philosophies, with everyone convinced their path is the right one.

Wasting Your Money

Of course, the most painful parallel to the 90s is the money. The dot-com mantra was “get big fast,” which fueled spectacular failures. The poster child was Webvan, the online grocery delivery service that famously committed about $1 billion to high-tech warehouses before it had the customer base or last-mile logistics to make the model work. The idea wasn’t wrong; we all buy things online now. The vision was just years ahead of the infrastructure.

We are seeing the same pattern play out with a certain class of AI gadgets. The Ai Pin from Humane, a company that raised over $230 million, and the Rabbit R1 are the Webvans of our time. They were sold on a compelling vision of ambient, screenless AI interaction. But the products that shipped were panned as slow, unreliable, and hobbled by poor battery life.

The error here isn’t the vision. The error is building and shipping a product today that depends on the technology of tomorrow. And this reveals a fundamental misunderstanding of how to build sustainable innovation.

If you are building an AI product, you need clearly defined, measurable metrics against a baseline. Without them, you are flying blind. You have no way to know if you’re making progress, and no data to tell you when you need to pivot. You’re just burning cash on hope. I’m seeing a lot of companies build agent-based systems without any clear metrics. Clinging to the sunk cost fallacy, they keep going, and by the time they realize they’re losing too much money, they’ve flushed a lot of value down the toilet. Those metrics could have signaled much earlier that things were going awry.

It’s not that ROI is missing - it’s that we don’t yet have the right frameworks to measure it.

— Hasit Trivedi, chief digital and AI officer at Firstsource

With respect to Mr. Trivedi, that’s not entirely true. We know how to measure business value; we’ve just forgotten to tie AI to existing business metrics. Yes, there are open questions about AI’s long-term potential, but we can measure its impact now.

However, if you’re building an AI strategy and you don’t have AI expertise, you want a “crawl, walk, run” strategy.

- Crawl: Start with the core, reliable AI use cases—the “HTML” of our time. Automate an internal workflow, improve an existing customer service process, generate marketing copy. These projects deliver immediate, measurable value and build organizational competence.

- Walk: Use the success and data from the crawl stage to tackle more complex, but still achievable, initiatives.

- Run: This is where you explore the bleeding edge. A wise strategy acknowledges that by the time your company is ready for the “run” stage, the core AI technology will have advanced significantly. Choices that seemed brilliant two years prior might be obsolete by the time you have the confidence to start on them.

On that last point: you don’t bet your entire company on going straight to “run” from a standstill when you don’t even know if you can walk.

The builders of the AI gadgets tried to sprint from a standing start. They built for a future where LLMs were faster, cheaper, and more reliable. But that future isn’t here yet. The lesson from Webvan isn’t that bold visions are bad. It’s that you can’t build a business on a foundation that doesn’t exist. Real progress is disciplined, iterative, and, above all, measurable.

Afterword: Finding Those Metrics

OK, rant done. That’s it. You can stop reading now.

What, you’re still here? Wondering about those metrics?

If you’re one of those people who struggle to figure out how to measure the value of a business project, don’t feel bad. You’re not alone. Many people struggle with this. I try to convince them to read the excellent book How to Measure Anything by Douglas Hubbard, but they don’t. So I took a different approach.

I used the process outlined in the book to develop The Business Measurement Mentor, available in ChatGPT. However, if you don’t have an account there, or you’d rather use that prompt somewhere else, I’ve also published the core prompt. You provide it with the business decision you’d like metrics for and it will walk you through a multi-step process to refine your knowledge and provide you with the metrics you need.

There is, however, a cheat code. Let’s say your marketing department wants access to ChatGPT, Claude, or Gemini, with the express intent of letting the team “brainstorm” wild marketing ideas and the resources needed to implement them. That’s cool, but how do you develop metrics for that, when you’re not even sure what’s going on? You can use my measurement mentor for that, but tell it, “I don’t have time to answer your questions, so give me several measurable metrics I might be able to use for [describe business decision here].”

Here’s what I got, using the marketing department example.

Understood. While I typically guide step-by-step, I’ll adapt to your request and provide a list of measurable metrics tied to the potential value and cost of giving your marketing team access to AI tools for brainstorming and idea implementation.

These metrics can help you evaluate whether the access leads to better or faster marketing innovation, and what resources it consumes.

✅ Potential Benefit Metrics

These focus on outputs from AI usage:

Number of New Campaign Concepts Generated per Month

- Baseline vs. after AI access.

Time from Idea Generation to First Draft Campaign

- Compare before vs. after using AI tools.

Campaign Success Rate

- % of AI-assisted campaigns that outperform a defined benchmark (e.g., 10% lift in engagement or conversion).

Lift in Key Marketing KPIs

- E.g., increase in click-through rates (CTR), engagement, lead conversion tied to AI-assisted campaigns.

Usage Rate of AI Tools

- % of marketing team using the tools weekly/monthly.

⏳ Cost and Resource Metrics

These show what it costs to enable AI brainstorming:

Average Time Spent Using AI Tools per User per Week

AI Licensing Cost per User per Month

Average Time Saved on Campaign Ideation or Drafting Tasks

- Self-reported or time-tracked reduction.

Support/Troubleshooting Requests per Month

- Measures friction or need for training.

🎯 Risk or Quality Control Metrics

To avoid misalignment or wasted effort:

% of AI-Generated Ideas Implemented

- Too low could mean poor quality; too high might mean insufficient vetting.

Internal Rating of Idea Quality

- Team leads score a sample of AI-generated ideas on a defined rubric.

If you later want to prioritize these metrics or link them to a decision (e.g., ROI on licenses), I can help walk through that with you. Would you like a quick scoring model or dashboard template to use with these?

If you can’t develop measurable metrics from this, perhaps consider a career move.
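And if it helps to see how little machinery this takes, here is a rough Python sketch of two of the metrics above: campaign success rate against a baseline, and a simple net monthly value for the licenses. Everything in it is an assumption for illustration: the `Campaign` fields, the sample conversion rates, the 10% lift threshold, and the hourly and license costs are made up, not data from any real team. Swap in your own numbers.

```python
from dataclasses import dataclass


@dataclass
class Campaign:
    name: str
    ai_assisted: bool
    conversion_rate: float  # 0.036 means 3.6%


def lift(value: float, baseline: float) -> float:
    """Relative change versus the baseline (0.10 means a 10% lift)."""
    return (value - baseline) / baseline


def campaign_success_rate(campaigns, baseline, min_lift=0.10):
    """Share of AI-assisted campaigns whose lift meets the benchmark."""
    assisted = [c for c in campaigns if c.ai_assisted]
    if not assisted:
        return 0.0
    wins = sum(1 for c in assisted if lift(c.conversion_rate, baseline) >= min_lift)
    return wins / len(assisted)


def net_monthly_value(hours_saved, hourly_cost, users, license_cost_per_user):
    """Value of the time saved minus licensing cost, per month."""
    return hours_saved * hourly_cost - users * license_cost_per_user


# Hypothetical sample data: replace with your own measurements.
campaigns = [
    Campaign("spring-email", True, 0.036),
    Campaign("summer-social", True, 0.031),
    Campaign("fall-search", False, 0.029),
    Campaign("winter-video", True, 0.040),
]
baseline_conversion = 0.030  # conversion rate measured before AI access

rate = campaign_success_rate(campaigns, baseline_conversion)
value = net_monthly_value(hours_saved=40, hourly_cost=60.0,
                          users=8, license_cost_per_user=30.0)

print(f"Campaign success rate: {rate:.0%}")
print(f"Net monthly value: ${value:,.2f}")
```

The important part is the `baseline_conversion` argument. Without a number captured before the rollout, none of the rest means anything.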