The Devil went down to Georgia.

He was lookin' for a soul to steal.

He was in a bind ’cause he was way behind.

He was willing to make a deal.

“Devil Went Down To Georgia”, The Charlie Daniels Band

Our client had just won a nice contract but was in a bind. Their legacy codebase, while powerful, was slow. They could not process more than 39 credit card transactions per second. They needed to get to 500 transactions per second for an event lasting 30 minutes. Because the event was highly publicized, there was a tight deadline. They had two weeks to get a proof of concept running, improving their performance by an order of magnitude.

They turned to our company, All Around the World, because we have a proven track record with them.

We had a senior systems architect, Shawn, and a senior software architect, Noel, on the project. Our managing director, Leïla, oversaw the project and ensured that if we had questions, she had answers. I was brought in from another project because there was simply too much work to do in two weeks. Fortunately, though I didn’t know the project, Shawn and Noel knew the system well and helped get me up to speed.

The Constraints

There were several key constraints we had to consider. Our overriding consideration was strict adherence to PCI compliance (Payment Card Industry compliance) so that customer data was always protected. Second, the client had developed an in-house ORM (object-relational mapper) many years ago and, like all projects, it had grown tremendously. While it was powerful, it was extremely slow and had a lot of business logic embedded in it. Third, because we only had two weeks for the first pass, we were given permission to take “shortcuts”, where necessary, with the understanding that all work was to be thoroughly documented and tested, and easy to either merge, remove, or disable, as needed.

Finally, we could change anything we wanted so long as we didn’t change the API or cause any breaking changes anywhere else in the code. There was no time to give customers a “heads up” that they would need to make changes.

Getting Started

Because the event only lasted 30 minutes, whatever solution we implemented didn’t have to stay up for long. This also meant that whatever solution we implemented had to be easy to disable quickly if needed. We also knew that only a few customers would use this new “fast” solution, and only one payment provider needed to be supported.

Noel immediately started tracing the full code path through the system, taking copious notes about any behavior we would need to be aware of. Shawn was investigating the databases, the servers, the network architecture, and assessing what additional resources could be brought online and tested in two weeks.

I, being new to the project, started by taking a full-stack integration test representing one of these transactions and studying it to learn the code and its behavior. In particular, I wanted to better understand the database behavior as this is often one of the most significant bottlenecks.

I was particularly concerned because the client was in the midst of a large migration from Oracle to PostgreSQL, so making changes at the database level was not an option. We needed to know immediately if this was one of the bottlenecks. I wrote code which would dump out a quick summary of database activity for a block of code. It looked sort of like this:

    explain dbitrace(
        name => 'Full stack transaction request',
        code => sub { $object->make_full_request(\%request_data) },
        save => '/tmp/all_sql.txt',
    );

The summary output looked similar to this:

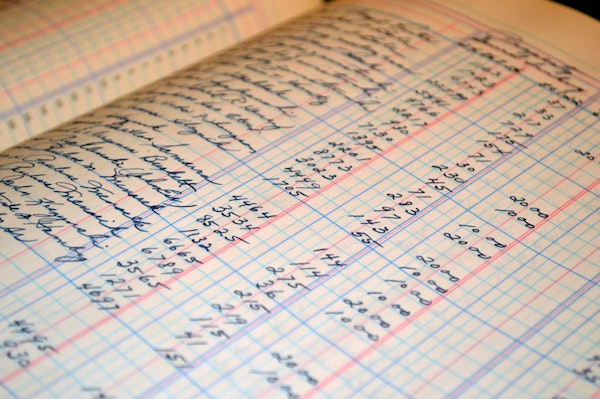

    {
        report => {
            name => 'Full stack transaction request',
            time => '3.70091 wallclock secs'
        },
        sql => {
            delete => 1,
            insert => 7,
            select => 137,
            update => 32,
            total  => 177,
        }
    }

We had a problem. Even our client was surprised about the amount of database activity for a single “buy this thing” request.

None of the database activity was particularly slow, but there was a lot of it. Deadlocks weren’t an issue given that the changed data was only for the current request, but the ORM was killing us.
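The `dbitrace` helper itself is not shown in this case study, so the following is only a minimal sketch of the tallying step behind a summary like the one above. The function name `summarize_sql` and the sample statements are assumptions made for illustration; how the SQL is actually captured is left out.

```perl
use strict;
use warnings;

# Hypothetical helper: tally SQL statements by their leading verb.
# Capturing the statements (for example, from a database trace) is not shown.
sub summarize_sql {
    my @statements = @_;
    my %count = ( total => 0 );
    for my $sql (@statements) {
        # The first word of the statement decides its category.
        my ($verb) = $sql =~ /^\s*(\w+)/ or next;
        $count{ lc $verb }++;
        $count{total}++;
    }
    return \%count;
}

# Made-up statements, purely for illustration.
my $summary = summarize_sql(
    'SELECT id FROM customers WHERE id = ?',
    'select rate FROM tax_rates WHERE region = ?',
    'UPDATE orders SET status = ? WHERE id = ?',
    'INSERT INTO audit_log (message) VALUES (?)',
);

printf "%-6s => %d\n", $_, $summary->{$_} for sort keys %$summary;
```

Even a crude count like this is enough to show when a single request is issuing far more queries than anyone expects.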

The Tyranny of ORMs

I love working with a good ORM, but ORMs generally trade execution speed for developer speed. You have to decide which is more important to you. Further, in two decades of working with ORMs, I have only once seen an in-house ORM which was on par with, or superior to, commercial or open source products. And it was an ORM optimized for reporting, something many ORMs struggle with.

For our client’s ORM, every time a request was made it would gather a bunch of metadata, check permissions, make decisions based on whether or not it was using Oracle or PostgreSQL, check to see if the data was cached, and then check to see if the data was in the database. Instantiating every object was very slow, even if there was no data available. And the code was creating—and throwing away without using—hundreds of these objects per request.

We considered using a “pre-flight” check to see if the data was there before creating the objects, but there was so much business logic embedded in the ORM layer that this was not a practical solution. And we couldn’t simply fetch the data directly because, again, the ORM had too much business logic. We had to reduce the calls to the database.

After an exhaustive analysis, we discovered several things.

- Some of the calls were for a “dead” part of the system that no one really remembered but was clearly unused.

- Numerous duplicate calls were being made to unchanging data. We could cache those objects safely.

- A number of calls were being made for important data that wasn’t relevant to our code path, so we could skip them.

Our first step in addressing the problem was to ensure that everything was wrapped in configuration variables that allowed us to easily turn different code paths on or off for our project. Fortunately, the client had a system that allowed them to update the configuration data without restarting the application servers, so this made our choice much safer.
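As a rough illustration of wrapping code paths in configuration variables, here is a minimal sketch. The flag names, the `%config` hash, and the step names are all hypothetical; the client's real configuration system is not shown in this case study.

```perl
use strict;
use warnings;

# Hypothetical flags standing in for the client's live configuration data.
my %config = (
    skip_dead_subsystem  => 1,
    cache_static_lookups => 0,
);

# Look the flag up on every call, so a live configuration update
# takes effect on the next request without a restart.
sub flag_enabled {
    my ($name) = @_;
    return $config{$name} ? 1 : 0;
}

# Returns the list of steps this request would run (names are illustrative).
sub process_request {
    my ($request) = @_;
    my @steps;
    push @steps, 'legacy_subsystem_check' unless flag_enabled('skip_dead_subsystem');
    push @steps, flag_enabled('cache_static_lookups') ? 'cached_lookup' : 'database_lookup';
    push @steps, 'charge';
    return \@steps;
}

print join( ', ', @{ process_request( {} ) } ), "\n";

# Simulate a live configuration change: re-enable the legacy path.
$config{skip_dead_subsystem} = 0;
print join( ', ', @{ process_request( {} ) } ), "\n";
```

Because every shortcut sits behind its own flag, any one of them can be switched off on its own if it misbehaves.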

Once that was in place, our first pass cut our SQL calls roughly in half and tremendously sped up the code, and the tests still passed. But we were nowhere near 500 transactions per second.

Caching

It’s said that the three most common bugs in software are cache invalidation and off-by-one errors. And we had caching problems in spades.

We couldn’t afford cache misses during the event, so we needed to “preheat” the caches by ensuring all information was loaded on server startup. Except that we had a few problems with this.

First, it was slow because there was a lot of data to cache. If we needed to rapidly bring new servers online during the event, this was not an option.

Second, it consumed a lot of memory and we were concerned about memory contention issues. Disk swapping was not an option.

Third, the app caches primarily used per-server memcached instances, but the data was global, not per-server, thus causing a lot of unnecessary duplication. Shawn already knew about this, so one of the first things he did was set up a secure Redis cluster with failover to replace memcached, where appropriate. Since we only had to heat the cache once, bringing up servers was faster, and we significantly reduced per-server memory consumption.

The application itself also used heavy in-memory caching (implemented as hashes), which we replaced with pre-loaded shared cache entries, thereby lowering memory requirements even further.

As part of this work, we centralized all caching knowledge into a single namespace rather than the ad-hoc “per module” implementations. We also created a single configuration file to control all of it, making caching much simpler for our client. This is one of the many features we added that they still use today.

SOAP

Another serious bottleneck was their SOAP server. Our client made good use of WSDL (Web Services Description Language) to help their customers understand how to create SOAP requests, and SOAP was integral to their entire pipeline. The client would receive a SOAP request, parse it, extract the necessary data, process that data, create another SOAP request, and pass this to back-end servers, which would repeat the process with the new SOAP request.

SOAP stands for “Simple Object Access Protocol.” But SOAP isn’t “objects”, and it’s not “simple.” It’s a huge XML document, with an outer envelope telling you how to parse the message contents. Reading and writing SOAP is slow. Further, our client’s SOAP implementation had grown over the years, with each new version of their SOAP interface starting with cutting-and-pasting the last version’s code into a new module and modifying that. There were, at the time of the project, 24 versions, most of which had heavily duplicated code. Optimizing their SOAP across all of those versions was a serious problem. However, we dug in further and found that only one version would be used for this project, so our client authorized us to skip updating the other SOAP versions.

We tried several approaches to fine-tuning the SOAP, including replacing many AUTOLOAD (dynamically generated) methods with static ones. In Perl, AUTOLOAD methods are optional “fallback” methods that are used when Perl cannot find a method of the desired name. However, this means Perl must carefully walk through inheritance hierarchies to ensure the method requested isn’t there before falling back to AUTOLOAD. This can add considerable overhead to a request. For this and other reasons, the use of AUTOLOAD is strongly discouraged.

Replacing these methods was extremely difficult work because the AUTOLOAD methods were used heavily, often calling each other, and had grown tremendously over the years. Many of them were extremely dangerous to pick apart due to their very complex logic. We managed to shave some more time with this approach but stopped before we tackled the most complicated ones. There was only so much risk we were willing to take.
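To make the AUTOLOAD discussion concrete, here is a minimal sketch contrasting a fallback accessor with a static one. The package names `Legacy::Message` and `Static::Message` and the `amount` field are invented for illustration and do not come from the client's code.

```perl
use strict;
use warnings;

package Legacy::Message;

our $AUTOLOAD;

sub new { my ( $class, %fields ) = @_; return bless { %fields }, $class }

# Fallback: Perl calls this only after failing to find the requested
# method anywhere in the inheritance hierarchy.
sub AUTOLOAD {
    my ($self) = @_;
    my $name = $AUTOLOAD;
    $name =~ s/.*:://;               # strip the package name
    return if $name eq 'DESTROY';    # object destruction is not an accessor
    die "No such field: $name" unless ref($self) && exists $self->{$name};
    return $self->{$name};
}

package Static::Message;

sub new { my ( $class, %fields ) = @_; return bless { %fields }, $class }

# Static replacement: found by ordinary method lookup.
sub amount { return $_[0]{amount} }

package main;

my $legacy = Legacy::Message->new( amount => 100 );
my $static = Static::Message->new( amount => 100 );

print $legacy->amount, "\n";    # resolved through AUTOLOAD
print $static->amount, "\n";    # resolved directly
```

Both calls return the same value; the difference is that the static version is visible to `can`, to tooling, and to anyone reading the class, which is part of why untangling heavily used AUTOLOAD methods is worth the effort when the risk is acceptable.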

Noel, in carefully reading through the SOAP code, also found several code paths that were slow, but didn’t apply to our requests. Unfortunately, we could not simply skip them because the SOAP code was tightly coupled with external business logic. Skipping these code paths would invariably break something else in the codebase. What he proposed, with caution, was the creation of a special read-only “request metadata” singleton. Different parts of the code, when recognizing they were in “web context”, could request this metadata and skip non-essential code paths. While singletons are frowned upon by experienced developers, we were under time pressure and in this case, our SOAP code and the code it called could all consult the singleton to coordinate their activity.
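A read-only request metadata singleton along these lines might look like the sketch below. The package name `Request::Metadata`, the `fast_path` field, and the `audit_step` function are assumptions for illustration; the real implementation is not shown in this case study.

```perl
use strict;
use warnings;

package Request::Metadata;

use Hash::Util qw(lock_hash);

my $instance;    # at most one per process, replaced for each request

# Hypothetical API: populate the metadata once, when the request is parsed.
sub init {
    my ( $class, %data ) = @_;
    $instance = bless { fast_path => 0, %data }, $class;
    lock_hash(%$instance);    # any later modification dies
    return $instance;
}

sub instance  { return $instance }
sub clear     { undef $instance }          # call at the end of each request
sub fast_path { return $_[0]{fast_path} }

package main;

# Any code, however deep in the call stack, can consult the singleton
# and skip work that does not apply to this kind of request.
sub audit_step {
    my $meta = Request::Metadata->instance;
    return 'skipped' if $meta && $meta->fast_path;
    return 'ran';
}

Request::Metadata->init( fast_path => 1 );
print audit_step(), "\n";

Request::Metadata->clear;
print audit_step(), "\n";
```

Locking the hash keeps the metadata read-only, so the singleton can coordinate code paths without becoming a second channel for mutable global state.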

The singleton is one of the many areas where deadlines and reality collide. Hence, the “Devil Went Down to Georgia” quote at the beginning of this case study. We were not happy with this solution, but sometimes you need to sacrifice “best practices” when you’re in an emergency situation. We alerted our client to the concern so they could be aware that this was not a long-term solution.

We also leveraged that singleton to allow us to alert the back-end that encrypted transaction data was available directly via Redis. The back-end merely needed to decrypt that data without the need to first deserialize SOAP and then decrypt the data. Another great performance improvement. Sadly, like many other improvements, it was specific to this one customer and this one payment provider.

However, this approach allowed us to remove even more SQL calls, again providing a nice performance boost.

Tax Server

By this time, with the above and numerous other fixes in place, we were ready to do our initial QA runs. We didn’t expect to hit our 500 TPS target, but we were pretty confident we had achieved some major speed gains. And, in fact, we did have some pretty impressive speed gains, except that periodically some of our requests would simply halt for several seconds. Due to the complicated nature of the system, it wasn’t immediately clear what was going on, but we finally tracked down an issue with the tax server.

Our client was processing credit card transactions and for many of them, taxes had to be applied. Calculating taxes is complicated enough that there are companies that provide “tax calculation as a service” and our client was using one of them. Though the tax service assured us they could handle the amount of traffic we were sending, they would periodically block. It wasn’t clear if this was a limitation of their test servers that we would avoid with their production servers, but we could not take this chance.

We tried several approaches, including caching of tax rates, but the wide diversity of data we relied on to calculate the tax meant a high cache miss rate, not allowing us to solve the problem. Finally, one of our client’s developers who knew the tax system fairly well came up with a great solution. He convinced the tax service to provide a “one-time” data dump of tax rates that would not be valid long but would be valid long enough for our short event. We could load the data into memory, read it directly, and skip the tax server entirely. Though the resulting code was complicated, it worked, and our requests no longer blocked.
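The idea of replacing a remote tax call with an in-memory lookup can be sketched as follows. This is a deliberately simplified illustration: the region keys and rates are placeholder values, not real tax data, and the format of the actual data dump is not described in this case study.

```perl
use strict;
use warnings;

# Placeholder table standing in for the one-time data dump.
# Keys and rates are invented for illustration only.
my %tax_rate_for = (
    'REGION-A' => 0.04,
    'REGION-B' => 0.08,
);

# Look the rate up in memory instead of calling the tax service.
sub tax_for {
    my ( $region, $amount_cents ) = @_;
    die "No tax rate loaded for $region" unless exists $tax_rate_for{$region};

    # Work in integer cents and round to the nearest cent.
    return int( $amount_cents * $tax_rate_for{$region} + 0.5 );
}

print tax_for( 'REGION-A', 1999 ), "\n";
```

A lookup that fails loudly on a missing region is safer than silently charging no tax, since the table is only valid for a short window and a limited set of inputs.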

The Big Test

With the above, and many other optimizations, we felt confident in what we delivered and in a late-night test run, our client was ecstatic. We were handling 700 transactions per second, almost twenty times faster than when we started. Our client hired us because they knew we could deliver results like this. Now it was time for our first production run, using beefier servers and a much faster network.

Cue the “sad trombone” music. We were only running about 200 to 300 transactions per second. As you may recall, the client had been migrating from Oracle to PostgreSQL. The QA servers were far less powerful than the production servers, but they were running PostgreSQL. The production servers were running Oracle. Oracle is the fast, beastly sports car that will quickly outpace that Honda Accord you’re driving ... so long as the sports car has an expert driver, constant maintenance, and an expensive, highly trained pit crew to look after it.

Out of the box, PostgreSQL just tends to be fast. Often companies find that a single PostgreSQL DBA working with competent developers is enough to easily handle their traffic. But we had Oracle. And it wasn’t going away before the deadline. Our first two weeks were up and our proof of concept worked, but greatly exceeding the client’s requirements in QA still wasn’t fast enough in production.

However, this was good enough for the proof of concept, so it was time to nail this down. It was going to be more nights and weekends for us, but we were having a huge amount of fun.

Oracle wasn’t the only problem, as it turns out. This faster, higher-capacity network had switches that automatically throttled traffic surges. This was harder to diagnose, but working around it alleviated the networking issues. Interestingly, it was Leïla who spotted that problem simply by listening carefully to what was being said in meetings. She has this amazing ability to hear when something isn’t quite right.

With the use of Oracle being a bottleneck, we had to figure out a way to remove those final few SQL statements. Our client suggested we might be able to do something unusual, based on how credit cards work.

When you check into a hotel and reserve your room with a credit card, your card is not charged for the room. Instead, they “authorize” (also known as “preauthorize”) the charge. That lowers the available credit on your card and reserves the money for the hotel. They will often preauthorize for more than the amount of your bill to allow a little wiggle room for incidental charges, such as food you may have ordered during your stay.

Later, when you check out, they’ll charge your card. Or, if you leave and forget to check out, they have a preauthorization, so they can still “capture” the preauthorization and get the money deposited to their account. This protects them from fraud or guests who are simply forgetful.

In our case, the client often used preauthorization for payments and after the preauthorization was successful, the card would be charged.

It worked like this: the front-end would receive the request and send it to the back-end. The back-end would submit the request for preauthorization to the payment provider and the payment provider would respond. The back-end sent the response to the front-end and if the preauthorization was denied, the denial would be sent back to the customer. However, usually it was approved and the front-end would then submit the charge back to the back-end. The back-end would then submit the preauthorized charge to the payment provider and lots of network and database activity helped to keep the server room warm. The entire process was about as fast as a snail crawling through mud.

What the client pointed out is that the money was reserved, so we could charge the card after the event because we had already preauthorized it. Instead of submitting the charge immediately, we could serialize the charge request and store it in an encrypted file on the front-end server (with the decryption key unavailable on those servers). A utility we wrote then read and batch processed those files after the event, allowing us to finish the transaction processing asynchronously.
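The deferred-capture flow can be sketched roughly as below. The function names `spool_charge` and `process_spool`, the field names, and the one-JSON-document-per-line file format are all assumptions for illustration. Encryption is deliberately left out of this sketch to keep it self-contained; the real files were encrypted, as described above.

```perl
use strict;
use warnings;
use JSON::PP;
use File::Temp qw(tempfile);

my $json = JSON::PP->new->canonical;

# Front-end side: append one serialized charge request per line.
# NOTE: encryption is omitted in this sketch; the real files were encrypted.
sub spool_charge {
    my ( $path, $charge ) = @_;
    open my $fh, '>>', $path or die "Cannot append to $path: $!";
    print {$fh} $json->encode($charge), "\n";
    close $fh or die "Cannot close $path: $!";
    return;
}

# Batch side, run after the event: hand every spooled request to a callback.
sub process_spool {
    my ( $path, $capture ) = @_;
    open my $fh, '<', $path or die "Cannot read $path: $!";
    my $processed = 0;
    while ( my $line = <$fh> ) {
        chomp $line;
        $capture->( $json->decode($line) );
        $processed++;
    }
    close $fh;
    return $processed;
}

my ( $tmp_fh, $spool ) = tempfile( UNLINK => 1 );
close $tmp_fh;

# Made-up charge requests for illustration.
spool_charge( $spool, { preauth_id => 'A1', amount_cents => 1999 } );
spool_charge( $spool, { preauth_id => 'B2', amount_cents => 500 } );

my $total = 0;
my $count = process_spool( $spool, sub { $total += $_[0]{amount_cents} } );
print "captured $count charges totalling $total cents\n";
```

Appending to a local file during the event costs almost nothing compared with a round trip to the payment provider and the database, which is the point of deferring the capture.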

This worked very well and by the time we were done, our changes to the code resulted in a single SQL SELECT statement. Further, after this and a few other tweaks, we were easily able to hit 500 transactions per second on their production system. When the event happened shortly after, the code ran flawlessly.

What We Delivered

Overview

Increase the performance of Business Transactions from 39/sec to 500+/sec.

The overall implemented solution could not change the outward-facing API calls, as end users of the service were not to be impacted by back-end changes. The solution also could not affect the traditional processing, as clients would be issuing both traditional and streamlined transactions.

New Caching Architecture

Implement a new redundant shared caching architecture using Redis as a data store, to replace the existing per-application-server memcached instances.

Update existing application code to remove the use of internal application caches (implemented as hashes), and replace with pre-loaded shared cache entries.

The new caching system caches items using both the legacy and the new caching system, so that production systems can be transitioned to the unified caching system without downtime.
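A dual-write transition of this kind might be sketched as follows. The two hashes are in-memory stand-ins for the legacy and new stores, and the `use_new` option is a hypothetical switch invented for this example.

```perl
use strict;
use warnings;

# In-memory stand-ins for the legacy and the new cache stores.
my %legacy_store;
my %new_store;

# Write to both stores so that either kind of server sees the value.
sub cache_set {
    my ( $key, $value ) = @_;
    $legacy_store{$key} = $value;    # servers not yet migrated read here
    $new_store{$key}    = $value;    # migrated servers read here
    return $value;
}

# Read from whichever store this server has been configured to use.
sub cache_get {
    my ( $key, %opt ) = @_;
    my $store = $opt{use_new} ? \%new_store : \%legacy_store;
    return $store->{$key};
}

cache_set( currency => 'EUR' );
print cache_get('currency'), ' ', cache_get( 'currency', use_new => 1 ), "\n";
```

Because every write lands in both places, individual servers can be switched to the new store one at a time without a cold cache or downtime.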

Allow a cache storage back-end to define its storage scope, so that caches can be managed based on the storage locality:

- “process”: cache is stored in local per-process memory

- “server”: cache is stored on the local server, and shared by all processes on the server

- “global”: cache is stored globally, and shared by all processes on any server
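One way a back-end could declare its own storage scope is sketched below. The class names, the `scope` method, and the `clear_scope` helper are all hypothetical, and both back-ends are plain in-memory stand-ins rather than real shared stores.

```perl
use strict;
use warnings;

package Cache::Backend::Memory;

sub new   { my ($class) = @_; return bless { data => {} }, $class }
sub scope { return 'process' }    # each back-end declares its own locality
sub set   { my ( $self, $key, $value ) = @_; $self->{data}{$key} = $value }
sub get   { my ( $self, $key ) = @_; return $self->{data}{$key} }
sub clear { my ($self) = @_; %{ $self->{data} } = (); return }

package Cache::Backend::FakeShared;    # stand-in for a globally shared store

our @ISA = ('Cache::Backend::Memory');
sub scope { return 'global' }

package main;

my %caches = (
    request_scratch => Cache::Backend::Memory->new,
    currency_codes  => Cache::Backend::FakeShared->new,
);

# Manage caches by locality, e.g. clear only the caches of one scope.
sub clear_scope {
    my ($scope) = @_;
    my @cleared = grep { $caches{$_}->scope eq $scope } sort keys %caches;
    $caches{$_}->clear for @cleared;
    return @cleared;
}

$caches{request_scratch}->set( step => 1 );
$caches{currency_codes}->set( EUR => 'Euro' );

clear_scope('process');
print defined( $caches{request_scratch}->get('step') ) ? "kept" : "cleared", "\n";
print $caches{currency_codes}->get('EUR'), "\n";
```

Letting each back-end report its scope means management code never needs to know which technology sits behind a given cache.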

Data Storage Performance

Create an additional data storage path that replaces storing intermediate data in the back-end shared database with the shared caching architecture.

Remove the use of a third party provider API call, and replace with a static table lookup.

Implement Staged Processing

As data processing in real time is not a requirement, add a data path that allows for separation of the front-end API responses from the third-party back-end processing. This allows heavy back-end processes to be deferred and processed in smaller batches.

Process Streamlining

Worked closely with the client to determine which parts of the process are required during the processing, and which can be bypassed to save overhead.

Code review and clean up. Refactor code base to remove extraneous or ill-performing legacy code.

Repeatable

The process was re-engineered to be configurable, allowing the same pipeline to be reimplemented for future events or customer requirements through simple, documented configuration options, while not impacting traditional processing.

Caveat

This information was compiled from our reports during this project and not written while the project was ongoing. Some events may not have happened in the exact sequence listed above.